The “Audio Visualiser” project was a playful piece of software I created as part of my family’s yearly tradition of crafting surprises for one another. This year, I developed an interactive program for the boyfriend of one of my sisters, whose passion for music and sound inspired the concept. The project became a sandbox in which various game elements reacted dynamically to audio input.

Features and Mechanics

The program used my laptop’s microphone to capture audio in real time and interpreted musical notes and voice tones to drive the visual effects. Key features included:

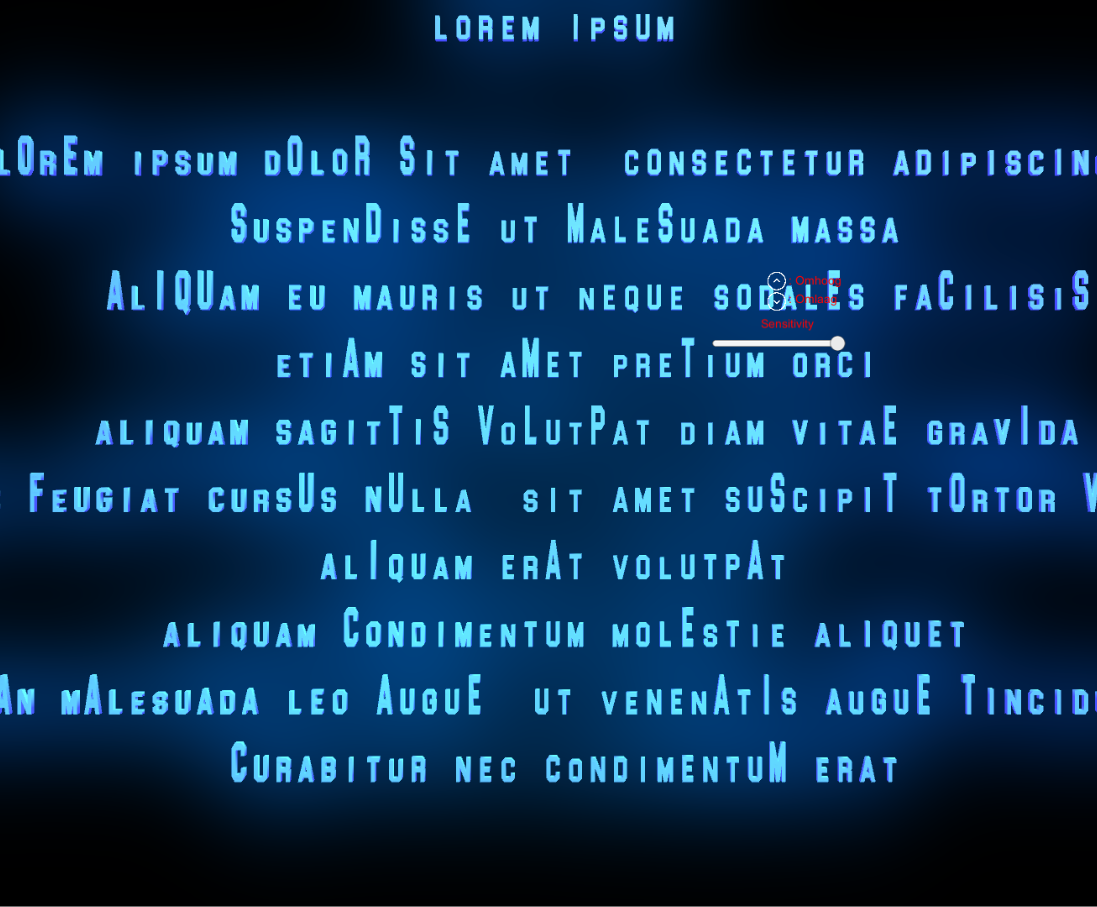

- Interactive Poetry Mode: A custom poem was integrated into the program. Letters “danced” in response to the reader’s voice, with different tones causing varying reactions.

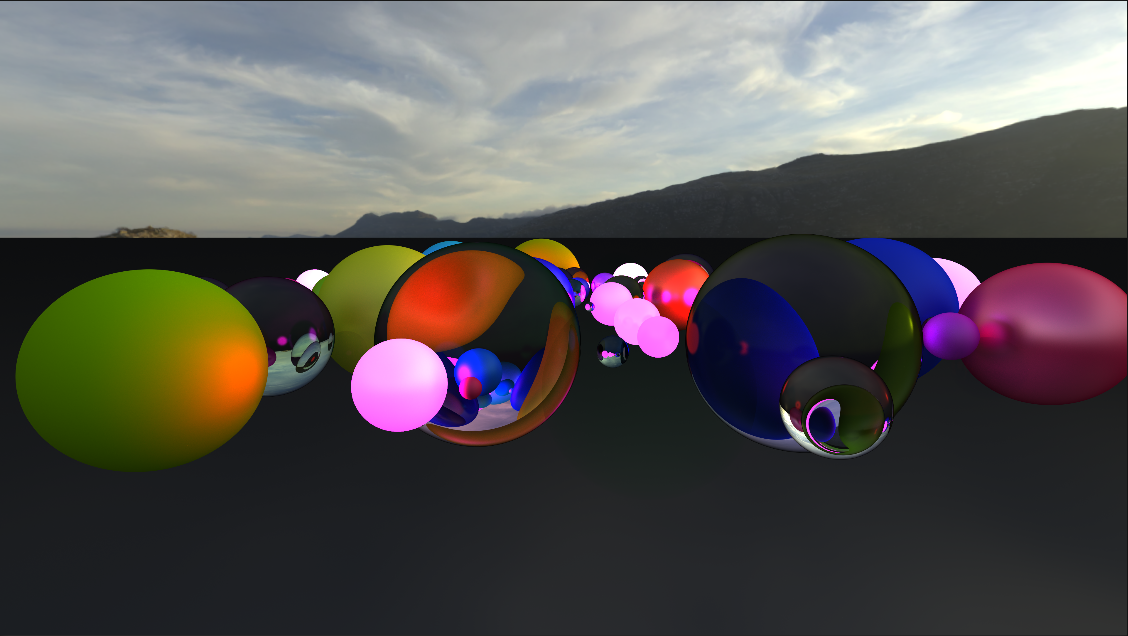

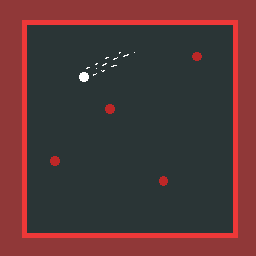

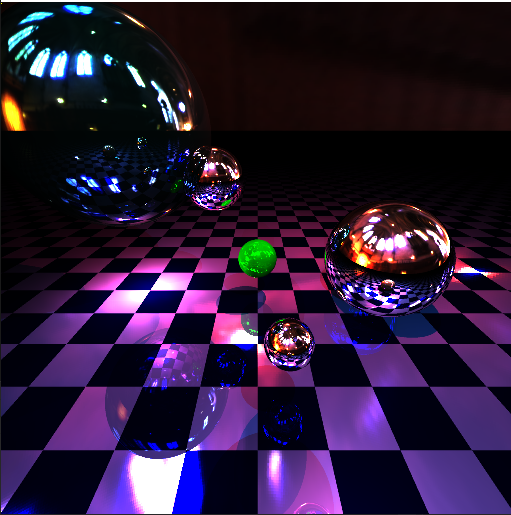

- Sandbox Mode: A physics-based environment where players could explore a box filled with balls, each reacting differently to sound inputs.

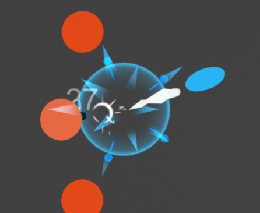

- Audio Visualizer: Musical notes and voice tones were analyzed in real-time, causing distinct objects to respond based on their pitch and intensity.
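
To make "pitch and intensity" concrete, here is a minimal sketch of the two measurements in plain Python. It is not the project's Unity code; the function names `rms_level` and `note_from_frequency` are hypothetical, and it assumes samples normalised to the range -1 to 1 and standard tuning with A4 at 440 Hz.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def rms_level(samples):
    """Intensity: root-mean-square amplitude of a block of samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def note_from_frequency(freq_hz, a4_hz=440.0):
    """Pitch: nearest equal-tempered note name for a frequency in Hz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # MIDI note 69 is A4; each semitone is a factor of 2 ** (1 / 12).
    midi = round(69 + 12 * math.log2(freq_hz / a4_hz))
    octave = midi // 12 - 1
    return f"{NOTE_NAMES[midi % 12]}{octave}"


if __name__ == "__main__":
    # One full cycle of a sine wave with amplitude 1.
    block = [math.sin(2 * math.pi * k / 100) for k in range(100)]
    print(rms_level(block))            # about 0.707 for a full-scale sine
    print(note_from_frequency(440.0))  # A4
```

In a visualiser like this one, the intensity value could scale how strongly an object reacts, while the note name (or the underlying semitone number) could select which object reacts.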

Technical Challenges and Learnings

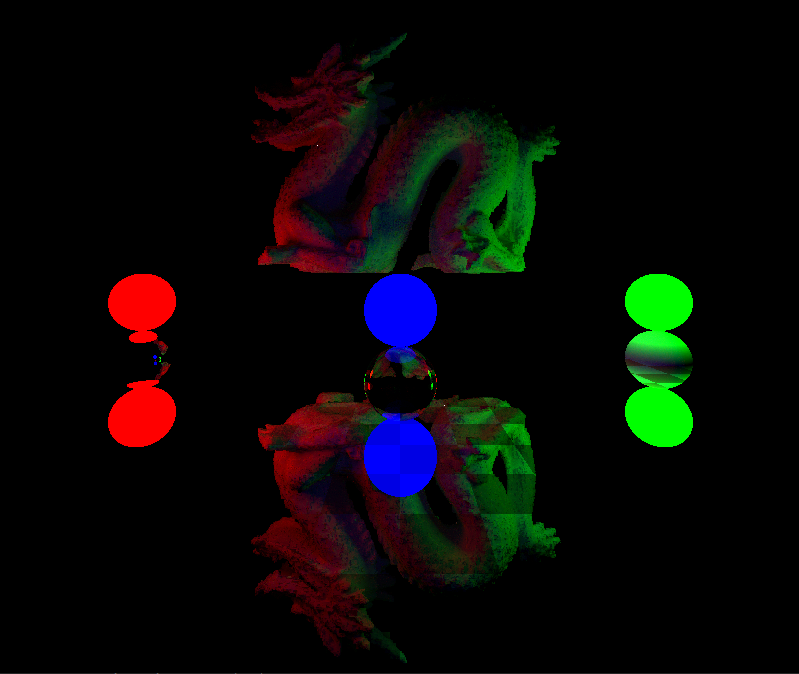

This project pushed me to dive deeper into Unity’s audio systems, prefabs, and shaders, particularly in:

- Capturing and processing real-time microphone input.

- Analyzing audio frequencies to map specific tones to game objects.

- Designing responsive visual effects that enhanced the interactive experience.
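
The frequency-to-object mapping in the list above can be sketched roughly as follows. This is an illustration in plain Python under stated assumptions, not the Unity implementation: it uses a naive discrete Fourier transform instead of an engine-provided spectrum, and the helper names (`magnitude_spectrum`, `dominant_frequency`, `band_index`) as well as the 80 Hz to 1000 Hz range are hypothetical choices.

```python
import cmath
import math


def magnitude_spectrum(samples):
    """Naive O(n^2) DFT magnitudes for bins 0 .. n/2 - 1 (small blocks only)."""
    n = len(samples)
    return [
        abs(sum(s * cmath.exp(-2j * math.pi * k * i / n)
                for i, s in enumerate(samples)))
        for k in range(n // 2)
    ]


def dominant_frequency(samples, sample_rate):
    """Frequency in Hz of the strongest non-DC bin (needs at least 4 samples)."""
    spectrum = magnitude_spectrum(samples)
    peak_bin = max(range(1, len(spectrum)), key=spectrum.__getitem__)
    return peak_bin * sample_rate / len(samples)


def band_index(freq_hz, num_objects, low_hz=80.0, high_hz=1000.0):
    """Map a frequency to one of num_objects slots on a logarithmic scale."""
    clamped = min(max(freq_hz, low_hz), high_hz)
    position = math.log(clamped / low_hz) / math.log(high_hz / low_hz)
    return min(int(position * num_objects), num_objects - 1)


if __name__ == "__main__":
    rate, size = 8000, 256
    # A pure 500 Hz tone, which falls exactly on bin 16 for this block size.
    tone = [math.sin(2 * math.pi * 500 * i / rate) for i in range(size)]
    freq = dominant_frequency(tone, rate)
    print(freq, band_index(freq, num_objects=5))
```

The logarithmic scale is a design choice: pitch perception is roughly logarithmic, so equal musical intervals land in equally sized bands, and low voices do not all collapse into the first object.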

Outcome

The result was a fun and creative sandbox that received positive feedback from its intended recipient. While the project didn’t have a clear gameplay goal, its charm lay in its interactive and experimental nature, showcasing the dynamic interplay between audio and visuals.

You can find the project and its source code on GitHub.